Table of Contents

- Introduction

- Understanding Snort3 Architecture

- The Rise of AI in Network Security

- Snort3’s Native ML Capabilities

- Integrating Agentic AI with Snort3

- Real-World Integration Patterns

- Implementation Guide

- The Future: Autonomous Security Operations

- Conclusion

Introduction

The convergence of traditional IDS/IPS technology with AI-based systems marks a major shift in network security.

Snort3, the third major version of the most widely adopted open-source IDS/IPS, was architected for extensibility and intelligence.

In this article, we explain how the modular architecture of Snort3 can work together with today’s AI and agentic AI systems for threat detection, response, and autonomous security operations.

We cover both the technical architecture of Snort3 and the practical integration models through which autonomous AI agents can add value to network security operations.

What You Will Learn

- Snort3’s modern, pluggable architecture

- Native machine learning capabilities (SnortML)

- Integration patterns with AI/ML systems

- Agentic AI workflows for autonomous threat response

- Practical implementation strategies

Understanding Snort3 Architecture

Snort3 represents a complete architectural overhaul from Snort 2.X, designed for modern multi-core processors, cloud environments, and critically, AI/ML integration.

Core Architectural Components

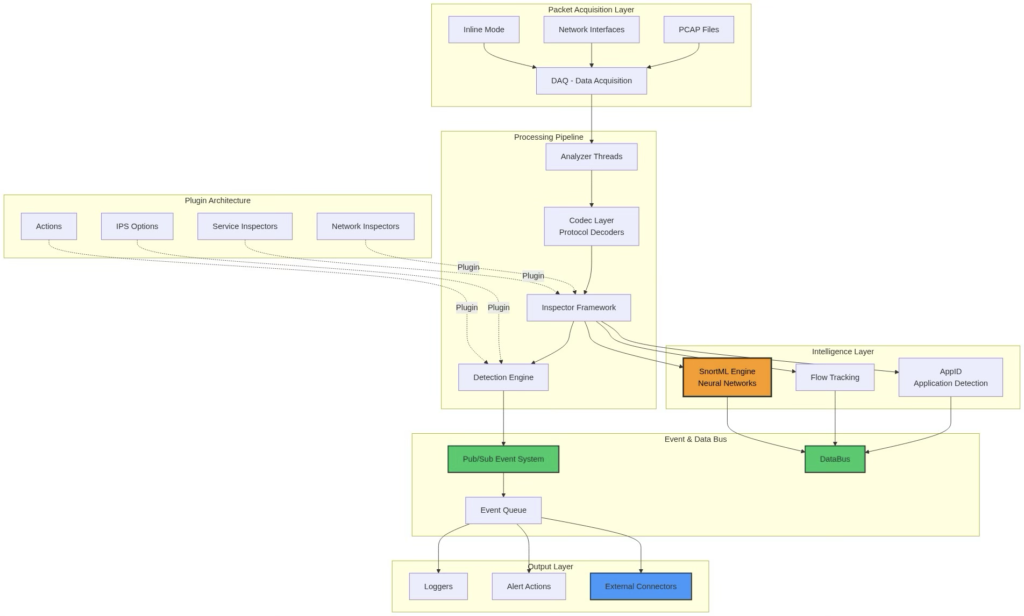

Figure 1: Snort3 Architecture Overview with plugin framework and event bus

Key Architectural Advantages for AI Integration

1. Multi-threading: Snort3 natively processes packets on multiple threads. Each packet thread runs independently of the others while sharing one common configuration, which suits parallel AI inference workloads.

2. Pluggable inspector framework: Custom inspectors are built as shared libraries, so an AI/ML model can be wrapped and loaded as an inspector plugin. The plugin model also lets you load new plugins without a full system restart.

3. Event-driven pub/sub model: Real-time events can be streamed out to external systems. Because publishers and subscribers are decoupled, multiple consumer types can each subscribe to different event types.

4. Shared network map and flow tracking: These provide contextual data to AI models, bidirectional flow analysis, and application-layer intelligence via AppId.
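The decoupling described in point 3 can be illustrated with a minimal in-process publish/subscribe hub. This is a sketch of the pattern only: the `EventBus` class, the event type names, and the event fields are hypothetical and are not Snort3's own C++ interface.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """Tiny publish/subscribe hub (illustrative; not Snort3's API)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """Register a handler for one event type."""
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, event: dict) -> int:
        """Deliver the event to every subscriber; return how many received it."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


ml_queue: List[dict] = []   # consumer 1: feeds an ML pipeline
audit_log: List[str] = []   # consumer 2: keeps a human-readable trail

bus = EventBus()
bus.subscribe("http_request", ml_queue.append)
bus.subscribe("alert", ml_queue.append)
bus.subscribe("alert", lambda e: audit_log.append(f"alert sid={e['sid']}"))

bus.publish("http_request", {"uri": "/index.php?id=1"})
bus.publish("alert", {"sid": 1000001, "src_ip": "203.0.113.7"})
```

The publisher never references its consumers directly, so an AI consumer can be added or removed without touching the code that emits events.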

The Rise of AI in Network Security

Traditional signature-based detection has fundamental limitations:

- Zero-day attacks: A previously unseen attack has no signature in the database to match.

- Polymorphic malware: Because the malware changes its code each time it runs, no single static pattern matches every instance.

- False positive rates: Too many false positives overwhelm the security team’s capacity to investigate each event.

- Manual rule creation: Writing a rule by hand for each threat behavior takes too long to keep pace with the rate at which new threats appear.
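A toy example makes the first two limitations concrete. The sketch below uses plain substring matching as a stand-in for signature detection; the `SIGNATURES` list and the sample payloads are invented for illustration, and real Snort rules run against normalized buffers and are far more capable than this.

```python
from urllib.parse import unquote

# Hypothetical "signature database": patterns matched verbatim.
SIGNATURES = ["union select", "<script>"]


def signature_match(payload: str) -> bool:
    """Return True if any known pattern appears verbatim in the payload."""
    lowered = payload.lower()
    return any(sig in lowered for sig in SIGNATURES)


known = "id=1 UNION SELECT password FROM users"
obfuscated = "id=1 UNION/**/SELECT password FROM users"  # comment-padded variant
encoded = "id=1%20UNION%20SELECT%20password"             # URL-encoded variant

print(signature_match(known))             # matched: the pattern is present as-is
print(signature_match(obfuscated))        # missed: same attack, different bytes
print(signature_match(encoded))           # missed until the input is decoded
print(signature_match(unquote(encoded)))  # matched after normalization
```

Normalization recovers the encoded variant, but each new obfuscation needs another rule or preprocessing step, which is the gap that learned models aim to narrow.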

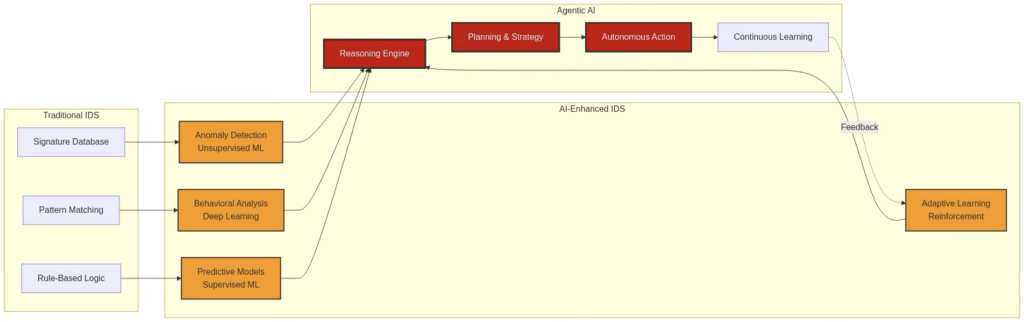

The approach shown in Figure 2 uses artificial intelligence (AI) and machine learning (ML) to address the limitations above:

Figure 2: IDS Technology evolution for traditional, AI-enhanced, and autonomous systems

Benefits of AI Integration

| Capability | Traditional IDS | AI-Enhanced IDS | Agentic AI IDS |
| --- | --- | --- | --- |
| Known Attack Detection | Excellent | Excellent | Excellent |
| Zero-Day Detection | Poor | Good | Excellent |
| Behavioral Analysis | Limited | Good | Excellent |
| Automated Response | Basic | Moderate | Advanced |
| Contextual Understanding | None | Limited | Comprehensive |
| Autonomous Decision Making | None | None | Advanced |
| Self-Improvement | None | Manual | Continuous |

Snort3’s Native ML Capabilities

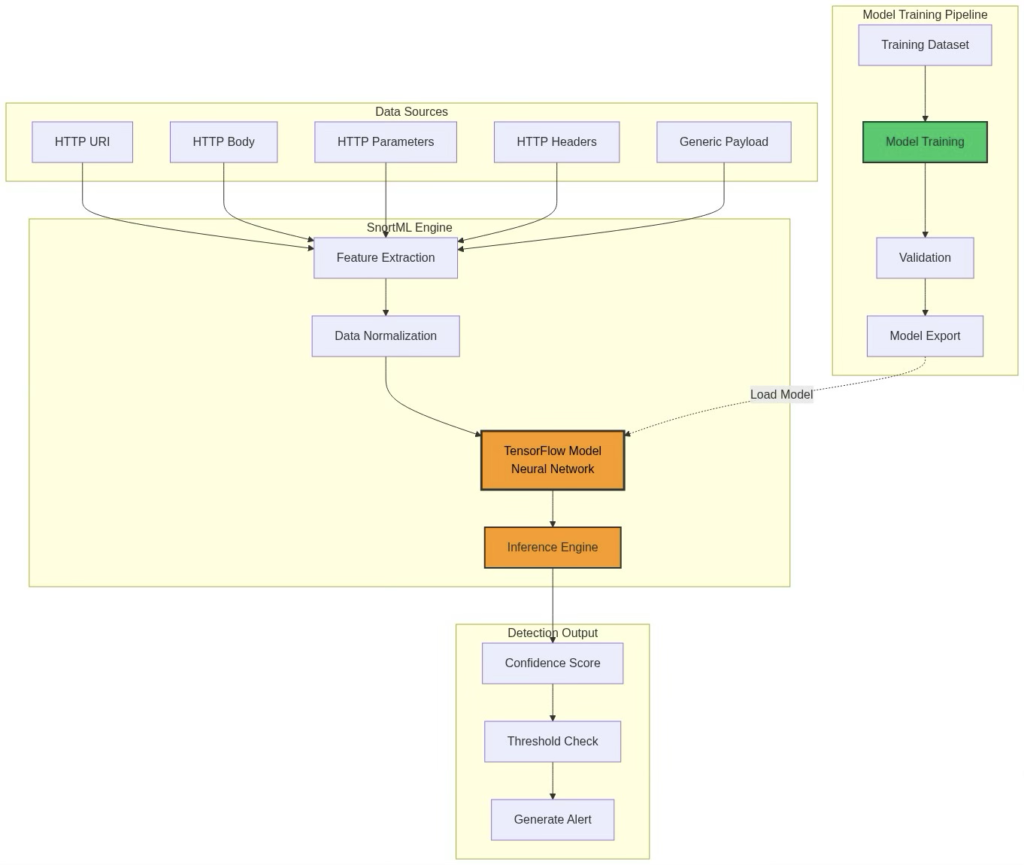

One of Snort3’s most notable features is SnortML, a built-in, neural-network-based exploit detector that uses TensorFlow.

SnortML Architecture

Figure 3: SnortML Engine for feature extraction, model training and inference workflow

SnortML Configuration Example

    -- Global engine configuration
    snort_ml_engine = {
        http_param_model = { 'models/http_param_exploit_detector.pb' },
        http_uri_model = { 'models/http_uri_exploit_detector.pb' },
    }

    -- Per-policy configuration
    snort_ml = {
        -- Enable HTTP URI inspection
        uri_depth = -1,             -- -1 = unlimited depth

        -- Enable HTTP body inspection
        client_body_depth = 10000,  -- first 10 KB

        -- Confidence threshold (0.0 to 1.0)
        threshold = 0.85,
    }

How SnortML Works

- Feature Extraction: HTTP requests are parsed, and the fields that may indicate a threat are identified and extracted.

- Normalization: The extracted features are normalized into the input format the neural network expects.

- Inference: The neural network makes a real-time prediction using the TensorFlow model.

- Scoring: The prediction is output as a confidence score (0.0 – 1.0).

- Decision: If the confidence score is greater than or equal to the threshold, Snort issues an alert.
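The five steps above can be sketched end to end in a few lines. This is only an illustration of the flow: `infer` is a hand-written stand-in that counts punctuation bytes, not a trained network, and the function names, vector length, and weights are assumptions made for the example rather than anything SnortML defines.

```python
import math
from urllib.parse import parse_qsl, urlsplit

THRESHOLD = 0.85  # same value as the configuration example


def extract_features(uri: str) -> str:
    """Step 1: pull the (decoded) query-parameter values out of the URI."""
    pairs = parse_qsl(urlsplit(uri).query, keep_blank_values=True)
    return " ".join(value for _, value in pairs)


def normalize(text: str, length: int = 64) -> list:
    """Step 2: fixed-length vector of byte values scaled to 0.0-1.0."""
    raw = text.lower().encode("utf-8")[:length]
    return [b / 255.0 for b in raw] + [0.0] * (length - len(raw))


def infer(vector: list) -> float:
    """Steps 3-4: stand-in for the model; returns a confidence score."""
    codes = [round(v * 255) for v in vector if v > 0.0]
    punct = sum(1 for c in codes if not (chr(c).isalnum() or chr(c) == " "))
    z = 2.0 * punct - 3.0               # arbitrary illustrative weights
    return 1.0 / (1.0 + math.exp(-z))   # squash into the 0.0-1.0 range


def inspect(uri: str) -> tuple:
    """Step 5: compare the score with the threshold to decide on an alert."""
    confidence = infer(normalize(extract_features(uri)))
    return confidence, confidence >= THRESHOLD


print(inspect("/item?id=42&page=2"))
print(inspect("/item?id=1%27+UNION+SELECT+pass--"))
```

With these illustrative weights the benign request scores well below the threshold and the injection attempt scores above it; a real model replaces `infer` with learned parameters.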

Key Advantage: Because SnortML learns the general characteristics and patterns of attacks rather than exact signatures, it can recognize novel attack variants that were not part of its training data.

Integrating Agentic AI with Snort3

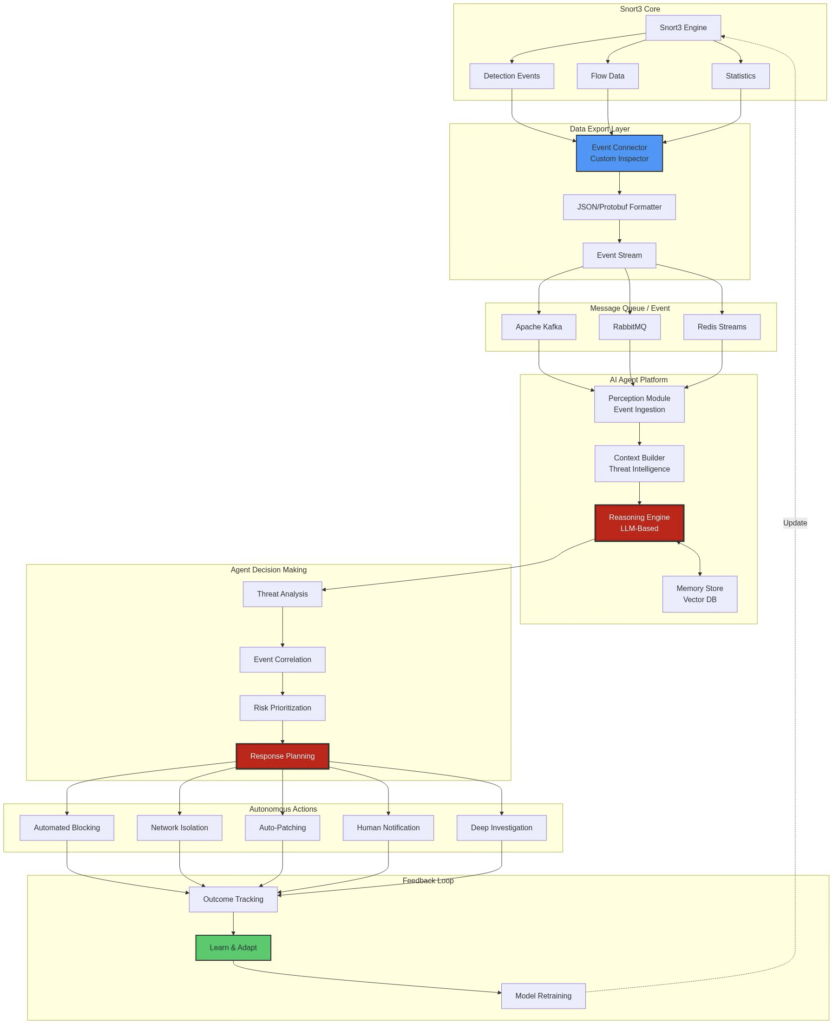

Agentic AI is the step from reactive machine learning (ML) models to autonomous systems that reason and make decisions. An AI agent perceives its environment (for example, network traffic and alerts), reasons about threats and context, plans a response, takes autonomous action, and learns from the outcome of those actions.

Conceptual Integration Architecture

Figure 4: End-to-End AI Agent Platform Integration with Snort3 Event Pipeline

Real-World Integration Patterns

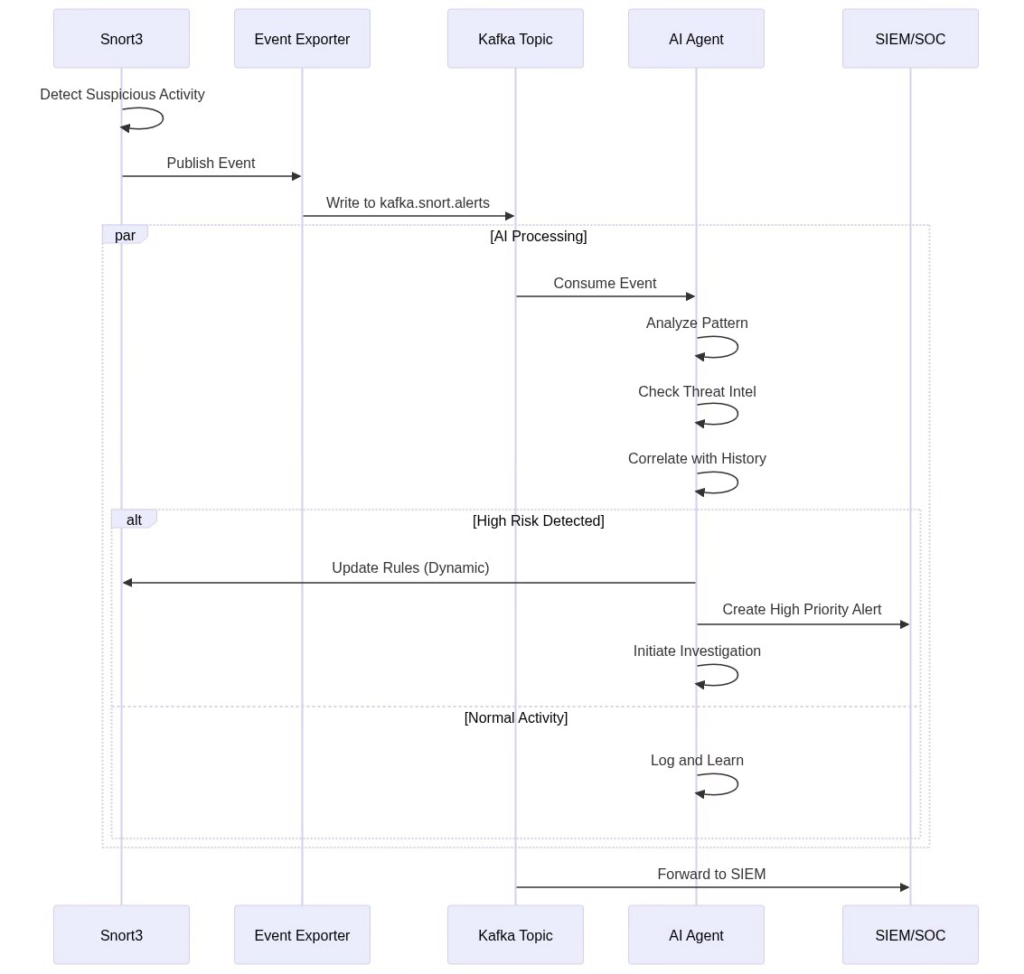

Pattern 1: Event Stream Processing

Use Case: Real-time anomaly detection and behavioral analysis:

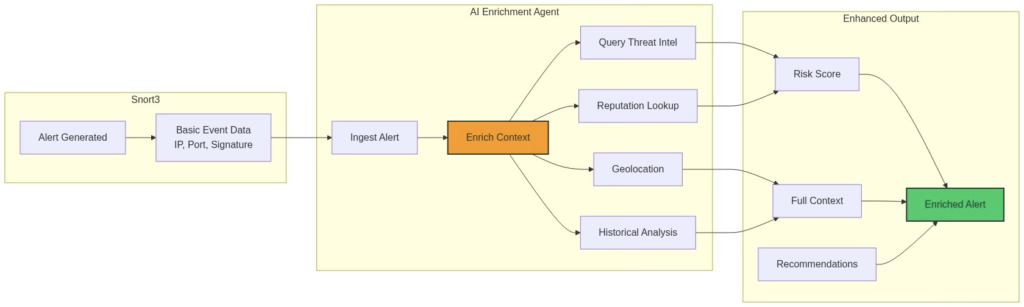

Figure 5: Threat Intelligence enrichment and context building process

Implementation:

    // Custom Event Exporter Inspector
    class AIEventExporter : public snort::Inspector {
    public:
        void eval(snort::Packet* p) override {
            // Extract event data
            EventData event = extract_event_data(p);

            // Serialize to JSON/Protobuf
            std::string json = serialize_event(event);

            // Publish to Kafka
            kafka_producer->send("snort.alerts", json);
        }
    };
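On the consuming side of the stream, a simple form of real-time anomaly detection is a sliding-window rate check per source address. The sketch below assumes each exported event is a dict carrying `src_ip` and a numeric `timestamp` in seconds; the class name and default limits are hypothetical.

```python
from collections import defaultdict, deque


class RateAnomalyDetector:
    """Flag a source IP whose alert count in a sliding window exceeds a limit."""

    def __init__(self, window_seconds: float = 60.0, max_alerts: int = 5) -> None:
        self.window_seconds = window_seconds
        self.max_alerts = max_alerts
        self._seen = defaultdict(deque)  # src_ip -> timestamps inside the window

    def observe(self, event: dict) -> bool:
        """Record one alert event; return True if its source is now anomalous."""
        timestamps = self._seen[event["src_ip"]]
        now = event["timestamp"]
        timestamps.append(now)
        # Drop timestamps that have fallen out of the window.
        while timestamps and now - timestamps[0] > self.window_seconds:
            timestamps.popleft()
        return len(timestamps) > self.max_alerts


detector = RateAnomalyDetector(window_seconds=10, max_alerts=3)
for t in (0, 1, 2, 3):
    flagged = detector.observe({"src_ip": "203.0.113.7", "timestamp": t})
    print(t, flagged)
```

A fixed threshold is the simplest possible baseline; a behavioral model would replace the final comparison with a learned estimate of what is normal for each source.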

Pattern 2: Contextual Enrichment

Use Case: Adding threat intelligence and context to alerts

Figure 6: Kafka-Based event processing sequence for autonomous threat response
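A minimal sketch of the enrichment step might look like the following. The `THREAT_FEED` table and the internal address ranges are hypothetical stand-ins; a real deployment would query external threat intelligence services and an asset inventory instead.

```python
import ipaddress

# Hypothetical in-memory threat feed keyed by CIDR range.
THREAT_FEED = {
    "203.0.113.0/24": {"category": "scanner", "risk": 80},
    "198.51.100.25/32": {"category": "botnet-c2", "risk": 95},
}

# Assumed internal address space (RFC 1918 private ranges).
INTERNAL_NETS = [
    ipaddress.ip_network(n)
    for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def lookup_threat_intel(ip: str):
    """Return the feed entry covering this address, or None if unknown."""
    addr = ipaddress.ip_address(ip)
    for cidr, info in THREAT_FEED.items():
        if addr in ipaddress.ip_network(cidr):
            return info
    return None


def enrich(alert: dict) -> dict:
    """Return a copy of the alert with threat-intel and topology context added."""
    enriched = dict(alert)  # leave the original alert untouched
    src = ipaddress.ip_address(alert["src_ip"])
    dst = ipaddress.ip_address(alert["dst_ip"])
    enriched["threat_intel"] = lookup_threat_intel(alert["src_ip"])
    enriched["src_internal"] = any(src in net for net in INTERNAL_NETS)
    enriched["dst_internal"] = any(dst in net for net in INTERNAL_NETS)
    return enriched


alert = {"signature_id": 1000001, "src_ip": "203.0.113.7", "dst_ip": "10.1.2.3"}
print(enrich(alert))
```

The enriched record gives a downstream model or analyst the direction of the traffic and the reputation of the source without another lookup.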

Pattern 3: Autonomous Response Loop

Use Case: Self-healing security with autonomous mitigation

    # Agentic AI Response System (Pseudo-code)
    class SecurityAgent:
        def __init__(self):
            self.llm = LargeLanguageModel("gpt-4")
            self.snort_api = Snort3API()
            self.firewall_api = FirewallAPI()
            self.memory = VectorDatabase()

        async def process_alert(self, alert):
            # Perception: Understand the threat
            context = await self.build_context(alert)

            # Reasoning: Analyze using LLM
            analysis = await self.llm.analyze(
                prompt=f"""
                Analyze this security alert:
                {alert}

                Context:
                {context}

                Determine:
                1. Severity (1-10)
                2. Attack type
                3. Recommended actions
                4. False positive probability
                """
            )

            # Planning: Decide response strategy
            plan = None  # stays None when the alert is below the action threshold
            if analysis.severity >= 8:
                plan = await self.create_response_plan(analysis)

                # Acting: Execute autonomous actions
                if plan.requires_blocking:
                    await self.firewall_api.block_ip(alert.src_ip)
                    await self.snort_api.add_suppression(alert.signature_id)

                if plan.requires_investigation:
                    await self.deep_dive_investigation(alert)

            # Learning: Store outcome
            await self.memory.store(alert, analysis, plan)

        async def build_context(self, alert):
            # Query historical data
            similar_alerts = await self.memory.find_similar(alert)

            # Query threat intelligence
            threat_intel = await self.query_threat_feeds(alert.src_ip)

            # Query network topology
            network_context = await self.get_network_context(alert)

            return {
                'history': similar_alerts,
                'threat_intel': threat_intel,
                'network': network_context
            }

Implementation Guide

Step 1: Build Custom Snort3 Event Connector

Create a custom inspector that exports events to external systems:

    // ai_event_connector.h
    #ifndef AI_EVENT_CONNECTOR_H
    #define AI_EVENT_CONNECTOR_H

    #include <memory>
    #include <string>

    #include "framework/inspector.h"
    #include "framework/module.h"

    // Assumption: KafkaProducer is a type exposing send(topic, payload);
    // adapt the include and calls to the Kafka client library you use.
    #include <kafka/KafkaProducer.h>
    #include <json/json.h>

    // Settings handed from the module to the inspector
    struct AIEventConnectorConfig {
        std::string kafka_brokers;
        std::string kafka_topic;
    };

    class AIEventConnectorModule : public snort::Module {
    public:
        AIEventConnectorModule();

        bool set(const char*, snort::Value&, snort::SnortConfig*) override;
        bool begin(const char*, int, snort::SnortConfig*) override;

    private:
        std::string kafka_brokers;
        std::string kafka_topic;
    };

    class AIEventConnector : public snort::Inspector {
    public:
        AIEventConnector(const AIEventConnectorConfig& config);

        void eval(snort::Packet*) override;
        void show(const snort::SnortConfig*) const override;

    private:
        void export_event(const snort::Packet*, const snort::Event*);
        Json::Value build_event_json(const snort::Packet*, const snort::Event*);

        std::unique_ptr<KafkaProducer> kafka_producer;
        AIEventConnectorConfig config;
    };

    #endif

    // ai_event_connector.cc
    #include "ai_event_connector.h"

    #include <algorithm>
    #include <cstdint>
    #include <ctime>
    #include <netinet/in.h>  // INET6_ADDRSTRLEN

    #include "detection/detection_engine.h"
    #include "flow/flow.h"

    // Helper: current wall-clock time as seconds since the Unix epoch
    static Json::Int64 get_timestamp() {
        return static_cast<Json::Int64>(std::time(nullptr));
    }

    // Helper: encode a byte buffer as a lowercase hex string
    static std::string bytes_to_hex(const uint8_t* data, size_t len) {
        static const char digits[] = "0123456789abcdef";
        std::string out;
        out.reserve(len * 2);
        for (size_t i = 0; i < len; ++i) {
            out.push_back(digits[data[i] >> 4]);
            out.push_back(digits[data[i] & 0x0F]);
        }
        return out;
    }

    void AIEventConnector::eval(snort::Packet* p) {
        if (!p || !p->flow)
            return;

        // Check if packet has alerts
        auto* context = snort::DetectionEngine::get_context();
        if (!context || context->events.empty())
            return;

        // Export each event
        for (const auto& event : context->events) {
            export_event(p, &event);
        }
    }

    void AIEventConnector::export_event(
        const snort::Packet* p,
        const snort::Event* event
    ) {
        // Build JSON event
        Json::Value json_event = build_event_json(p, event);

        // Serialize and send to Kafka
        Json::StreamWriterBuilder builder;
        std::string json_str = Json::writeString(builder, json_event);
        kafka_producer->send(config.kafka_topic, json_str);
    }

    Json::Value AIEventConnector::build_event_json(
        const snort::Packet* p,
        const snort::Event* event
    ) {
        Json::Value root;

        // Timestamp
        root["timestamp"] = get_timestamp();

        // Event IDs
        root["signature_id"] = event->sig_id;
        root["generator_id"] = event->gen_id;
        root["revision"] = event->revision;

        // Network 5-tuple
        char src_ip[INET6_ADDRSTRLEN];
        char dst_ip[INET6_ADDRSTRLEN];
        p->ptrs.ip_api.get_src()->ntop(src_ip, sizeof(src_ip));
        p->ptrs.ip_api.get_dst()->ntop(dst_ip, sizeof(dst_ip));

        root["src_ip"] = src_ip;
        root["dst_ip"] = dst_ip;
        root["src_port"] = p->ptrs.sp;
        root["dst_port"] = p->ptrs.dp;
        // Cast the protocol enum to a plain integer for JSON
        root["protocol"] = static_cast<int>(p->get_ip_proto_next());

        // Flow data
        if (p->flow) {
            // client_pkts is a packet counter, not a flow identifier, so it is named accordingly
            root["flow_client_pkts"] = static_cast<Json::UInt64>(p->flow->flowstats.client_pkts);
            root["flow_state"] = p->flow->ssn_state.session_flags;

            // AppID information
            if (p->flow->application_protocol) {
                root["application"] = p->flow->application_protocol;
            }
        }

        // Packet payload (first N bytes for ML analysis)
        if (p->data && p->dsize > 0) {
            size_t payload_size = std::min<size_t>(p->dsize, 1024);
            std::string payload_hex = bytes_to_hex(p->data, payload_size);
            root["payload_hex"] = payload_hex;
            root["payload_size"] = p->dsize;  // full size, even if the hex is truncated
        }

        return root;
    }
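On the receiving end, a consumer has to validate this JSON and turn `payload_hex` back into bytes. The sketch below shows one way to do that; the sample message values are invented, and the choice of required fields is an assumption made for the example.

```python
import json

# Example message in the shape build_event_json emits (values are illustrative).
raw_message = json.dumps({
    "timestamp": 1700000000,
    "signature_id": 1000001,
    "generator_id": 1,
    "revision": 1,
    "src_ip": "203.0.113.7",
    "dst_ip": "10.0.0.5",
    "src_port": 51234,
    "dst_port": 80,
    "protocol": 6,
    "payload_hex": "474554202f20485454502f312e31",
    "payload_size": 14,
})

REQUIRED_FIELDS = ("signature_id", "src_ip", "dst_ip", "src_port", "dst_port", "protocol")


def parse_event(message: str) -> dict:
    """Decode one exported event and attach the raw payload bytes."""
    event = json.loads(message)
    missing = [field for field in REQUIRED_FIELDS if field not in event]
    if missing:
        raise ValueError(f"event is missing fields: {missing}")
    payload = bytes.fromhex(event.get("payload_hex", ""))
    event["payload"] = payload
    # payload_size is the full packet payload; the hex may hold only the first bytes.
    event["payload_truncated"] = event.get("payload_size", len(payload)) > len(payload)
    return event


event = parse_event(raw_message)
print(event["payload"], event["payload_truncated"])
```

Checking required fields at the boundary keeps malformed messages from reaching the analysis stage.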

Step 2: Configure Snort3 Lua

    -- snort.lua
    ai_event_connector = {
        kafka_brokers = "localhost:9092",
        kafka_topic = "snort.security.alerts",
        export_payload = true,
        max_payload_size = 1024,
    }

    -- Enable SnortML
    snort_ml_engine = {
        http_param_model = { '/opt/snort3/models/http_exploit_detector.pb' },
        http_uri_model = { '/opt/snort3/models/uri_exploit_detector.pb' },
    }

    snort_ml = {
        uri_depth = -1,
        client_body_depth = 10000,
        threshold = 0.80,
    }

Step 3: Implement AI Agent Consumer

    # ai_security_agent.py
    import asyncio
    import json

    from kafka import KafkaConsumer
    # These imports follow the legacy LangChain package layout.
    from langchain import OpenAI, PromptTemplate
    from langchain.vectorstores import Pinecone
    from langchain.embeddings import OpenAIEmbeddings


    class SnortAIAgent:
        def __init__(self):
            self.consumer = KafkaConsumer(
                'snort.security.alerts',
                bootstrap_servers=['localhost:9092'],
                value_deserializer=lambda m: json.loads(m.decode('utf-8'))
            )
            self.llm = OpenAI(model="gpt-4", temperature=0.1)
            self.embeddings = OpenAIEmbeddings()
            # Connect to an existing index (assumes the Pinecone client is already initialized)
            self.vector_store = Pinecone.from_existing_index(
                index_name="security-incidents",
                embedding=self.embeddings
            )
            self.prompt_template = PromptTemplate(
                input_variables=["alert", "context", "history"],
                template="""
    You are an expert cybersecurity analyst AI agent.

    Current Alert:
    {alert}

    Network Context:
    {context}

    Similar Historical Incidents:
    {history}

    Analyze this alert and provide:
    1. Severity Score (1-10)
    2. Attack Classification
    3. Confidence Level (0-100%)
    4. Recommended Actions
    5. Reasoning

    Format your response as a JSON object with the keys severity_score (integer),
    attack_classification (string), confidence_level (number from 0 to 100),
    recommended_actions (list of strings), and reasoning (string).
    """
            )

        async def run(self):
            print("AI Security Agent started...")
            # Iterating a kafka-python consumer blocks between messages
            for message in self.consumer:
                alert = message.value
                await self.process_alert(alert)

        async def process_alert(self, alert):
            print(f"Processing alert: SID {alert['signature_id']}")

            # Step 1: Build context
            context = await self.build_context(alert)

            # Step 2: Find similar historical incidents
            history = await self.find_similar_incidents(alert)

            # Step 3: LLM-based analysis
            analysis = await self.analyze_with_llm(alert, context, history)

            # Step 4: Decide and act
            await self.execute_response(alert, analysis)

            # Step 5: Store for future learning
            await self.store_incident(alert, analysis)

        async def build_context(self, alert):
            # Query threat intelligence APIs
            threat_intel = await self.query_virustotal(alert['src_ip'])

            # Get geolocation
            geo = await self.query_geolocation(alert['src_ip'])

            # Get network topology info
            topology = await self.query_network_topology(
                alert['src_ip'],
                alert['dst_ip']
            )

            return {
                'threat_intel': threat_intel,
                'geolocation': geo,
                'topology': topology
            }

        async def find_similar_incidents(self, alert):
            # Create a text description of the current alert for embedding
            alert_text = f"""
            Source: {alert['src_ip']}
            Destination: {alert['dst_ip']}
            Signature: {alert['signature_id']}
            Payload: {alert.get('payload_hex', '')[:200]}
            """

            # Vector similarity search
            similar = self.vector_store.similarity_search(
                alert_text,
                k=5
            )
            return similar

        async def analyze_with_llm(self, alert, context, history):
            prompt = self.prompt_template.format(
                alert=json.dumps(alert, indent=2),
                context=json.dumps(context, indent=2),
                history=json.dumps([h.page_content for h in history], indent=2)
            )

            response = await self.llm.agenerate([prompt])
            analysis = json.loads(response.generations[0][0].text)
            return analysis

        async def execute_response(self, alert, analysis):
            severity = analysis['severity_score']

            if severity >= 8:
                # High severity: autonomous action
                print(f"HIGH SEVERITY ALERT: {severity}/10")
                print(f"Classification: {analysis['attack_classification']}")
                print(f"Confidence: {analysis['confidence_level']}%")

                if analysis['confidence_level'] >= 85:
                    # Auto-block with high confidence
                    await self.block_ip(alert['src_ip'])
                    await self.notify_soc(alert, analysis, urgency='high')
                else:
                    # Notify human for verification
                    await self.notify_soc(alert, analysis, urgency='review')

            elif severity >= 5:
                # Medium severity: log and monitor
                print(f"MEDIUM SEVERITY: {severity}/10")
                await self.add_to_watchlist(alert['src_ip'])

            else:
                # Low severity: log only
                print(f"LOW SEVERITY: {severity}/10")

        async def block_ip(self, ip_address):
            # Call firewall API
            print(f"Blocking IP: {ip_address}")
            # Implementation: firewall_api.block(ip_address)

        async def store_incident(self, alert, analysis):
            # Store in vector database for future reference
            incident_text = f"""
            Timestamp: {alert['timestamp']}
            Source IP: {alert['src_ip']} -> {alert['dst_ip']}
            Signature ID: {alert['signature_id']}
            Severity: {analysis['severity_score']}
            Classification: {analysis['attack_classification']}
            Actions Taken: {analysis['recommended_actions']}
            Outcome: [To be updated]
            """

            self.vector_store.add_texts([incident_text])
            print("Incident stored for future learning")

        # Placeholder integrations: replace each body with a call to your own service.
        async def query_virustotal(self, ip_address):
            return {}

        async def query_geolocation(self, ip_address):
            return {}

        async def query_network_topology(self, src_ip, dst_ip):
            return {}

        async def notify_soc(self, alert, analysis, urgency):
            print(f"Notifying SOC ({urgency}): SID {alert['signature_id']}")

        async def add_to_watchlist(self, ip_address):
            print(f"Adding to watchlist: {ip_address}")


    if __name__ == "__main__":
        agent = SnortAIAgent()
        asyncio.run(agent.run())
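The tiered logic in `execute_response` is easier to test when it is separated from the side effects. The sketch below isolates it as a pure function using the same thresholds (severity 8 and 5, confidence 85); the `Action` enum and the tolerant confidence parsing are additions for illustration, since an LLM may return the confidence as a number or as a string such as "90%".

```python
from enum import Enum


class Action(Enum):
    BLOCK_AND_NOTIFY = "block_and_notify"
    HUMAN_REVIEW = "human_review"
    WATCHLIST = "watchlist"
    LOG_ONLY = "log_only"


def parse_confidence(value) -> float:
    """Accept 85, 85.0 or '85%' and return a float in the 0-100 range."""
    if isinstance(value, str):
        value = value.strip().rstrip("%")
    confidence = float(value)
    if not 0.0 <= confidence <= 100.0:
        raise ValueError(f"confidence out of range: {confidence}")
    return confidence


def decide(analysis: dict) -> Action:
    """Map an LLM analysis to a response tier without performing any action."""
    severity = int(analysis["severity_score"])
    if not 1 <= severity <= 10:
        raise ValueError(f"severity out of range: {severity}")
    confidence = parse_confidence(analysis["confidence_level"])

    if severity >= 8:
        # Only block automatically when the model is also confident.
        return Action.BLOCK_AND_NOTIFY if confidence >= 85 else Action.HUMAN_REVIEW
    if severity >= 5:
        return Action.WATCHLIST
    return Action.LOG_ONLY


print(decide({"severity_score": 9, "confidence_level": "90%"}))
print(decide({"severity_score": 9, "confidence_level": 60}))
```

Rejecting out-of-range values before acting means a malformed model response results in an error to handle rather than an unintended block.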

Step 4: Deploy and Monitor

    #!/bin/bash
    # deploy_ai_snort.sh

    # Start Kafka
    docker-compose up -d kafka zookeeper

    # Start Snort3 with custom plugin
    snort -c /etc/snort/snort.lua \
        --plugin-path /opt/snort3/lib/plugins \
        -i eth0 \
        -D

    # Start AI Agent
    python ai_security_agent.py &

    # Monitor
    tail -f /var/log/snort/alerts.json

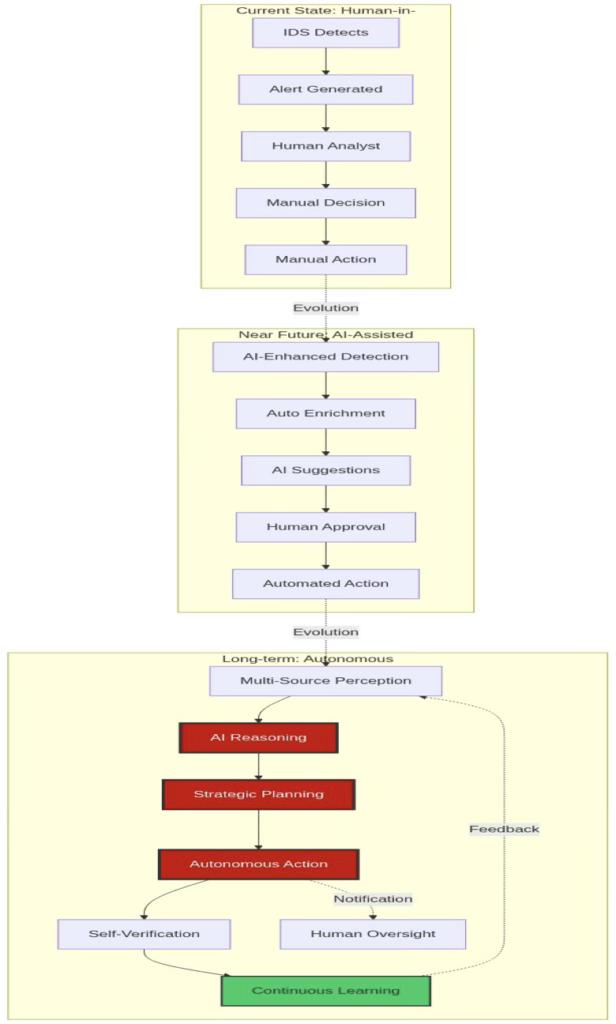

The Future: Autonomous Security Operations

The convergence of Snort3 and Agentic AI points toward a future of truly autonomous security operations:

Figure 7: Security operations evolution from the current state to full autonomy

Vision: Autonomous Security Operations Center (ASOC)

Imagine a security operations center where:

- AI agents: Continuously monitor Snort3 telemetry data.

- LLM-based reasoning: AI understands complex attack chains.

- Autonomous response: Systems respond to threats as they occur.

- Self-learning models: Models improve without human intervention.

- Human experts: Focus on strategy rather than tactical response.

Key Technologies That Will Enable This Future

- Large Language Models (LLMs): GPT-4, Claude, Gemini for reasoning.

- Reinforcement Learning: Agents learn the optimal responses.

- Graph Neural Networks: Understanding network topology.

- Federated Learning: Collaborative learning across organizations.

- Explainable AI: Understanding why an agent made its decisions.

Conclusion

The architecture of Snort3 is ready for that AI-driven future. Its pluggable design makes new features easy to add, its event-driven model lets external systems react to events as they occur, and its native machine learning (ML) capabilities provide a solid base for developing the next generation of autonomous security systems.

Key Takeaways

- Snort3 is AI-Ready: Native ML support, extensible plugin architecture.

- Multiple Integration Points: Event streams, custom inspectors, pub/sub.

- Agentic AI Potential: Autonomous detection, analysis, and response.

- Future-Proof: Designed for continuous evolution and improvement.

Next Steps

- Experiment with SnortML: Train custom models for your environment.

- Build Event Connectors: Export Snort3 data to AI platforms.

- Develop AI Agents: Start with alert enrichment, then progress toward autonomous response.

- Join the Community: Contribute to open-source Snort3 AI initiatives.

Resources

- Snort3 GitHub: https://github.com/snort3/snort3

- Snort3 Documentation: https://www.snort.org/documents

- SnortML Guide: Included in distribution

- LangChain: https://python.langchain.com/

- OpenAI: https://platform.openai.com/